Introduction

In ASIC verification, we all use a methodology known as Metric Driven Verification, but we rarely use that term. Instead, we usually call it Coverage Driven Verification, and to a large extent the two terms mean the same thing. In general, we identify the important metrics and then build the test bench to track them closely. Verification closure depends on the progress of those metrics. Coverage is simply a collection of a few such metrics that we track in every test bench. Code coverage in particular is taken for granted because it comes readily from the EDA tools. Functional coverage, on the other hand, requires dedicated planning and effort to model and execute. There are also metrics, which I like to call second order metrics, that we often ignore. We tend to focus only on the obvious first order metrics that fall under functional and code coverage. In this article, we will explore second order metrics and how to include them in verification closure.

Most of the time, when we hear the term metric analyzer, we think of functional coverage. A metric analyzer can indeed hold a set of covergroups that model functional coverage, but that is not all it is meant for. A metric analyzer is a more generic component that can serve many purposes beyond being a placeholder for covergroups. In my opinion, it is especially well suited to calculating and tracking second order metrics.

Second order metrics

A cover point tracks a single variable. It samples the variable at different times and tells us how thoroughly the values we care about have been exercised. In some cases, however, the metric we are interested in cannot be tracked with a single variable. We might need to take samples or measurements of one or more variables and then calculate the metric over a period of time.

Data rate is a simple example. It is the amount of data transferred divided by the time taken to transfer it, so no single sampled value can represent it. I call metrics of this type second order metrics. Most second order metrics relate to performance.

Imagine a DUT that is a simple round robin arbiter. To verify such a design, the metric that needs to be stressed is how efficiently the round robin arbitration serves its clients. We can only know this after applying a lot of traffic and observing the behavior of the round robin algorithm over a period of time. For an arbiter, most people quickly realize the importance of second order metrics, but the same applies to any design. If the design is more data path centric, we are likely to ignore second order metrics. We then focus only on first order coverage metrics such as data integrity and modes of operation. Regardless of the DUT, both first order and second order metrics should be planned during verification planning, and both should drive the test bench architecture.
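The sketch below turns the round robin arbiter example into a second order metric. It counts grants per client over a window of traffic and reports how evenly they were shared. It is plain SystemVerilog without UVM, so it runs on its own. The class `rr_fairness_metric`, its methods, and the choice of a max/min grant ratio as the fairness figure are assumptions made for this illustration. In a real test bench, a monitor on the grant signals would call `record_grant()`.

```systemverilog
// Hypothetical second order metric for a round robin arbiter: how evenly
// grants are shared between clients over a window of observed traffic.
class rr_fairness_metric;
  protected int unsigned grants[];  // grant count per client
  protected int unsigned total;     // all grants seen in the window

  function new(int unsigned num_clients);
    grants = new[num_clients];      // dynamic array elements start at 0
    total  = 0;
  endfunction

  // Called (e.g. from a monitor) every time the arbiter issues a grant
  function void record_grant(int unsigned client);
    grants[client]++;
    total++;
  endfunction

  // Fraction of all grants that went to one client
  function real share(int unsigned client);
    if (total == 0) return 0.0;
    return real'(grants[client]) / total;
  endfunction

  // Ratio of the most-served to the least-served client: 1.0 is perfectly even.
  // Returns -1.0 if some client received no grant at all (starvation).
  function real max_min_ratio();
    int unsigned lo, hi;
    lo = grants[0];
    hi = grants[0];
    foreach (grants[i]) begin
      if (grants[i] < lo) lo = grants[i];
      if (grants[i] > hi) hi = grants[i];
    end
    if (lo == 0) return -1.0;
    return real'(hi) / lo;
  endfunction
endclass

// Usage: feed the metric with the grant sequence of an ideal round robin
// arbiter whose four clients always request, then check it at the end.
module rr_fairness_demo;
  rr_fairness_metric fairness;

  initial begin
    fairness = new(4);
    for (int n = 0; n < 12; n++) fairness.record_grant(n % 4);
    $display("share(0) = %0.2f, max/min ratio = %0.2f",
             fairness.share(0), fairness.max_min_ratio());
    if (fairness.max_min_ratio() != 1.0)
      $error("Round robin arbitration is not sharing grants evenly");
  end
endmodule
```

The exact 1.0 check only holds here because the window is a whole number of rounds (12 grants, 4 clients). With arbitrary windows, a small tolerance would be needed.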

3-Dimensional Approach

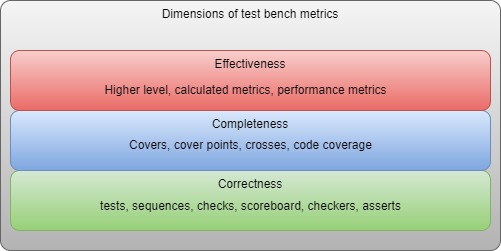

When building a new test bench, I always visualize its quality in three dimensions: Correctness, Completeness and Effectiveness. All three must be kept in mind at every step of building the test bench. Closure is measured by how deep we can reach in each of them. Let us look at what each dimension means.

Correctness

Correctness tells us whether a feature of the design is implemented as expected. Most engineers focus on this dimension alone. They build the test bench to explicitly verify the correctness of every feature the design implements. The test cases, sequences, scoreboards, checkers, assertions and other analysis components are all built for correctness.

For example, if we are verifying a CPU, we need to verify the different operations it performs, such as ADD, SUB and MUL. If we are verifying an AXI-based design, we need to verify that the AXI protocol is followed correctly. We also need to verify the data integrity of the design, the write and read operations, and so on. An explicit metric analyzer is usually not needed for correctness, because the tests, sequences and other components already check each feature directly.
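Here is a minimal correctness check for the CPU example. A reference model predicts the result of ADD, SUB and MUL, and a checker compares the prediction with what the DUT produced. The enum `alu_op_e`, the classes `alu_txn` and `alu_checker`, and the assumption of 32-bit signed operands are all hypothetical. In a UVM test bench, this logic would normally live in a scoreboard fed by a monitor.

```systemverilog
// Hypothetical operations of a simple CPU datapath
typedef enum {ADD, SUB, MUL} alu_op_e;

// Hypothetical transaction observed by a monitor: operands, operation, DUT result
class alu_txn;
  alu_op_e op;
  int      a, b;     // 32-bit signed operands
  int      result;   // value produced by the DUT
endclass

// Reference model plus checker: predicts each result and compares it with the DUT
class alu_checker;
  int unsigned passed, failed;

  function int predict(alu_txn t);
    case (t.op)
      ADD:     return t.a + t.b;
      SUB:     return t.a - t.b;
      MUL:     return t.a * t.b;
      default: return 0;
    endcase
  endfunction

  function bit check(alu_txn t);
    int expected;
    expected = predict(t);
    if (t.result == expected) begin
      passed++;
      return 1;
    end
    failed++;
    $error("%s mismatch: a=%0d b=%0d expected=%0d got=%0d",
           t.op.name(), t.a, t.b, expected, t.result);
    return 0;
  endfunction
endclass

module alu_checker_demo;
  alu_checker chk;
  alu_txn     t;

  initial begin
    chk = new();
    t   = new();
    t.op = MUL; t.a = 6; t.b = 7; t.result = 42;  // pretend the DUT produced 42
    void'(chk.check(t));
    $display("passed=%0d failed=%0d", chk.passed, chk.failed);
  end
endmodule
```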

Completeness

The second dimension is completeness. Correctness alone is not enough to verify a design. Each feature that the design implements supports a range of inputs. It is not enough to check that the feature is correct; we need to ensure that correctness holds across the full range of inputs the feature supports. Sometimes a single feature can operate in different modes, and its correctness needs to be checked across all of those modes. Verifying correctness across this full range is called completeness. In other words, a test or a checker tells us that a feature works, but we also want to know how ‘thoroughly’ it has been shown to work.

Completeness is what we measure with code coverage and functional coverage. Code coverage tells us whether every line, branch, state and block of the code has been exercised by the tests. Functional coverage tells us whether variables took particular values of interest and whether interesting sequences of events occurred in a simulation. It also tells us whether specific state transitions, crosses of different modes, crosses of dependent features and so on were hit.

For an AXI design, some of the interesting items to look for are:

- back to back read or write transactions
- different burst types, burst lengths and sizes
- flow control
- the full range of addresses and IDs
- narrow transfers
- sparse transactions

Each of these is a metric we want to keep track of, and a metric analyzer can be created to hold all the relevant covergroups in one place. Most verification engineers plan the test bench architecture around both correctness and completeness. This is largely because they are aware of how important both are to the overall quality of verification.
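The AXI items listed above map naturally onto covergroups. The sketch below shows one possible way to wrap such a covergroup in a class that a metric analyzer could hold. The class name, the bin boundaries and the assumption of a 64-bit data bus (which makes AxSIZE values 0 to 3 legal) are all hypothetical. Only the burst encodings (FIXED = 0, INCR = 1, WRAP = 2) follow the AXI AxBURST field.

```systemverilog
// AXI burst type encoding (AxBURST field values)
typedef enum bit [1:0] {FIXED = 2'b00, INCR = 2'b01, WRAP = 2'b10} axi_burst_e;

// Hypothetical completeness collector that a metric analyzer could own.
// Assumes a 64-bit data bus, so AxSIZE 0..3 are the legal transfer sizes.
class axi_burst_coverage;
  axi_burst_e burst;
  bit [7:0]   len;   // AxLEN: number of beats minus one
  bit [2:0]   size;  // AxSIZE: log2 of bytes per beat

  covergroup burst_cg;
    option.per_instance = 1;
    cp_burst: coverpoint burst;                // one bin per burst type
    cp_len: coverpoint len {
      bins single      = {0};
      bins short_burst = {[1:15]};
      bins long_burst  = {[16:255]};
    }
    cp_size: coverpoint size {
      bins narrow[] = {[0:2]};                 // narrower than the 64-bit bus
      bins full     = {3};                     // full bus width
    }
    burst_x_size: cross cp_burst, cp_size;     // every burst type at every size
  endgroup

  function new();
    burst_cg = new();
  endfunction

  // Called for every address-channel handshake observed by the monitor
  function void observe(axi_burst_e b, bit [7:0] l, bit [2:0] s);
    burst = b;
    len   = l;
    size  = s;
    burst_cg.sample();
  endfunction
endclass

module axi_cov_demo;
  axi_burst_coverage cov;
  initial begin
    cov = new();
    cov.observe(INCR, 8'd0, 3'd3);
    cov.observe(WRAP, 8'd3, 3'd2);
    $display("burst coverage = %0.2f%%", cov.burst_cg.get_inst_coverage());
  end
endmodule
```

Burst length is deliberately left out of the cross. AXI restricts WRAP bursts to 2, 4, 8 or 16 beats, so a naive burst by length cross would contain unreachable bins unless they were excluded with `ignore_bins`.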

Effectiveness

Except for a few engineers, almost everyone ignores the third dimension: effectiveness. Effectiveness measures how well the design performs a feature that has already been shown to be correct and complete. It is a performance measure. Just as coverage metrics measure completeness, there are specific metrics that measure the effectiveness, or performance, of the design. Coverage metrics are relatively easy to identify and implement. Performance metrics are neither trivial to identify nor easy to implement, which may be why most people ignore this dimension altogether while building test benches.

Common performance metrics include latency, throughput, bandwidth, channel utilization and round trip time. Most of them are calculated over a period of time after observing a lot of data path traffic. For the same AXI design, the metrics could be the latency of the design, the maximum data rate, the maximum response delay, the average data rate and the depth of reordering.

In my opinion, metric analyzers are best suited to these kinds of metrics. A metric analyzer computes distinct metrics from the transactions it receives and reports them at the end of the test. Checks can then verify whether the calculated metrics are within the range given by the specification. These performance metrics need to be identified during planning and kept in mind while building both the test bench and the test cases.
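Latency is a good example of a metric that only exists over time, because each value is the difference between two observations: a request and its response. The sketch below matches requests and responses by transaction ID and checks the worst case against a bound at the end of the test. The class `latency_tracker`, its method names, and the bound of 30 time units used in the demo are hypothetical. The in-order matching relies on AXI returning responses to same-ID transactions in the same direction in the order they were issued.

```systemverilog
// Hypothetical latency tracker: each response is matched with the oldest
// outstanding request that carries the same ID. Use one instance per
// direction (read or write), since AXI orders same-ID responses per direction.
class latency_tracker;
  protected time pending[int][$];  // outstanding request timestamps, per ID
  protected time latencies[$];     // completed request-to-response latencies

  function void request(int id, time now);
    if (!pending.exists(id)) pending[id] = {};
    pending[id].push_back(now);
  endfunction

  function void response(int id, time now);
    time start;
    if (!pending.exists(id) || pending[id].size() == 0) begin
      $error("Response with ID %0d has no outstanding request", id);
      return;
    end
    start = pending[id].pop_front();
    latencies.push_back(now - start);
  endfunction

  function time max_latency();
    time worst = 0;
    foreach (latencies[i])
      if (latencies[i] > worst) worst = latencies[i];
    return worst;
  endfunction

  function real mean_latency();
    real total = 0;
    if (latencies.size() == 0) return 0.0;
    foreach (latencies[i]) total += latencies[i];
    return total / latencies.size();
  endfunction

  // End-of-test check against a bound taken from the specification
  function bit within_spec(time max_allowed);
    return max_latency() <= max_allowed;
  endfunction
endclass

module latency_demo;
  latency_tracker lat;
  initial begin
    lat = new();
    lat.request(1, $time);        // t = 0:  ID 1 issued
    #10 lat.request(2, $time);    // t = 10: ID 2 issued
    #15 lat.response(1, $time);   // t = 25: ID 1 completes, latency 25
    #5  lat.response(2, $time);   // t = 30: ID 2 completes, latency 20
    $display("max latency = %0d, mean latency = %0.1f",
             lat.max_latency(), lat.mean_latency());
    if (!lat.within_spec(30)) $error("Latency exceeds the specified bound");
  end
endmodule
```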

A Generic Metric Analyzer

We have talked enough; now it's time for some code that can be used right away. I will show a very simple and generic template for a metric analyzer that can be reused across many projects and customized to your own project's needs. The basic idea is to create a simple class called metric that captures the value of any metric at different points in time. It stores the captured values in an array and performs the required operations on that array, such as sum, average, min and max. A wrapper class called metric analyzer then holds a collection of named metrics. The implementation below should give you a better idea.

Metric analyzer example

```systemverilog
// metric.sv file

// A metric stores a series of measurements and derives simple statistics from them
class metric extends uvm_object;

  `uvm_object_utils(metric)

  // All measurements captured so far, in arrival order
  protected real measurements[$];

  function new(string name = "metric");
    super.new(name);
  endfunction : new

  // Record one measurement (defaults to 1, so sum() can also count events)
  virtual function void measure(real value = 1);
    measurements.push_back(value);
  endfunction : measure

  // Discard the most recent measurement, if there is one
  virtual function void remove_measurement();
    if (measurements.size() > 0) begin
      void'(measurements.pop_back());
    end
  endfunction : remove_measurement

  virtual function int total_measurements();
    return measurements.size();
  endfunction : total_measurements

  // Returns real so that fractional measurements are not truncated
  virtual function real sum();
    real total = 0;
    foreach (measurements[i]) begin
      total += measurements[i];
    end
    return total;
  endfunction : sum

  // Arithmetic mean; 0 when nothing has been measured
  virtual function real mean();
    int size = measurements.size();
    if (size == 0) return 0;
    return sum() / size;
  endfunction : mean

  // Smallest measurement; 0 when nothing has been measured
  virtual function real min();
    real minimum;
    if (measurements.size() == 0) return 0;
    minimum = measurements[0];
    foreach (measurements[i]) begin
      if (measurements[i] < minimum) minimum = measurements[i];
    end
    return minimum;
  endfunction : min

  // Largest measurement; 0 when nothing has been measured
  virtual function real max();
    real maximum;
    if (measurements.size() == 0) return 0;
    maximum = measurements[0];
    foreach (measurements[i]) begin
      if (measurements[i] > maximum) maximum = measurements[i];
    end
    return maximum;
  endfunction : max

endclass : metric
```

```systemverilog
// metric_analyzer.sv file

// A collection of named metrics. Derived classes create their metrics in the
// constructor and override write() to measure them from received transactions.
class metric_analyzer #(type T = int) extends uvm_subscriber #(T);

  `uvm_component_param_utils(metric_analyzer#(T))

  // Metrics indexed by name
  protected metric metrics[string];

  function new(string name = "metric_analyzer", uvm_component parent = null);
    super.new(name, parent);
  endfunction : new

  virtual function void create_metric(string name);
    if (metrics.exists(name)) begin
      `uvm_warning("CREATE_METRIC", $sformatf("Metric with name %s already exists.", name))
    end else begin
      metrics[name] = metric::type_id::create(name);
    end
  endfunction : create_metric

  virtual function metric get_metric(string name);
    if (!metrics.exists(name)) begin
      `uvm_error("GET_METRIC", $sformatf("Metric with name %s does not exist.", name))
      return null;
    end
    return metrics[name];
  endfunction : get_metric

  virtual function void measure(string name, real value = 1);
    metric m = get_metric(name);
    if (m != null) m.measure(value);
  endfunction : measure

  virtual function void remove_measurement(string name);
    metric m = get_metric(name);
    if (m != null) m.remove_measurement();
  endfunction : remove_measurement

  // The query functions below return 0 if the named metric does not exist
  virtual function real sum(string name);
    metric m = get_metric(name);
    return (m != null) ? m.sum() : 0;
  endfunction : sum

  virtual function real mean(string name);
    metric m = get_metric(name);
    return (m != null) ? m.mean() : 0;
  endfunction : mean

  virtual function real min(string name);
    metric m = get_metric(name);
    return (m != null) ? m.min() : 0;
  endfunction : min

  virtual function real max(string name);
    metric m = get_metric(name);
    return (m != null) ? m.max() : 0;
  endfunction : max

  // Print one row of statistics per metric
  virtual function void print();
    string line;
    `uvm_info("METRIC_ANALYZER", "Name\t\tTotal\tSum\t\tMin\t\tMax\t\tAverage", UVM_NONE)
    foreach (metrics[name]) begin
      metric m = metrics[name];
      line = $sformatf("%s\t%0d\t%0.2f\t%0.2f\t%0.2f\t%0.2f", name, m.total_measurements(),
                       m.sum(), m.min(), m.max(), m.mean());
      `uvm_info("METRIC_ANALYZER", line, UVM_NONE)
    end
  endfunction : print

  // Derived analyzers override write() to call measure() for each received transaction
  virtual function void write(T t);
  endfunction : write

endclass : metric_analyzer
```

```systemverilog
// metrics_pkg.sv file

package metrics_pkg;

  import uvm_pkg::*;
  `include "uvm_macros.svh"

  `include "metric.sv"
  `include "metric_analyzer.sv"

endpackage : metrics_pkg
```

```systemverilog
// top.sv file

`include "metrics_pkg.sv"

module top();

  import uvm_pkg::*;
  import metrics_pkg::*;
  `include "uvm_macros.svh"

  initial begin
    metric_analyzer#(int) i_metric_analyzer;

    i_metric_analyzer = metric_analyzer#(int)::type_id::create("metric_analyzer", null);
    i_metric_analyzer.create_metric("latency");
    i_metric_analyzer.create_metric("datarate");
    i_metric_analyzer.create_metric("bandwidth");

    // Add some dummy values. In a real test bench, the measuring happens in the
    // write() method of a class derived from metric_analyzer.
    for (int i = 1; i < 20; i++) begin
      i_metric_analyzer.measure("latency", i*2);
      i_metric_analyzer.measure("datarate", i*200);
      i_metric_analyzer.measure("bandwidth", i*2000);
    end

    i_metric_analyzer.print();
  end

endmodule : top
```

To use this class, we first extend metric_analyzer and define our metrics in the constructor of the derived class. Notice that metric_analyzer extends uvm_subscriber. We just need to create an instance of the derived metric analyzer in the environment and connect it to a uvm_analysis_port to receive transactions. The write() method of the derived class calls measure() on the different metrics. A few trivial operations such as sum, average, min and max are shown for demonstration, but the class can be extended with any custom operation. I hope this simple template gives you an idea of how to create and use a metric analyzer.
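Here is a minimal sketch of those usage steps, assuming the metrics_pkg from this article with an overridable write(). The following names are all hypothetical:

- the transaction class `axi_txn` and its fields `start_time`, `end_time` and `num_bytes`
- the derived class `axi_metric_analyzer`
- the environment `axi_env` and its stand-in analysis port `mon_ap`

In a real environment, the port would belong to an AXI monitor.

```systemverilog
`include "uvm_macros.svh"
import uvm_pkg::*;
import metrics_pkg::*;  // metric and metric_analyzer from this article

// Hypothetical transaction published by an AXI monitor
class axi_txn extends uvm_sequence_item;
  `uvm_object_utils(axi_txn)
  time         start_time;  // when the address handshake completed
  time         end_time;    // when the last data beat or response was seen
  int unsigned num_bytes;   // bytes moved by the burst

  function new(string name = "axi_txn");
    super.new(name);
  endfunction
endclass

// Derived analyzer: metrics are created in the constructor and measured in write()
class axi_metric_analyzer extends metric_analyzer #(axi_txn);
  `uvm_component_utils(axi_metric_analyzer)

  function new(string name = "axi_metric_analyzer", uvm_component parent = null);
    super.new(name, parent);
    create_metric("latency");
    create_metric("bytes");
  endfunction

  // Called once for every transaction written to analysis_export
  virtual function void write(axi_txn t);
    measure("latency", t.end_time - t.start_time);
    measure("bytes", t.num_bytes);
  endfunction

  // Print the collected metrics at the end of the test
  virtual function void report_phase(uvm_phase phase);
    super.report_phase(phase);
    print();
  endfunction
endclass

// Hypothetical environment wiring; mon_ap stands in for a real monitor's port
class axi_env extends uvm_env;
  `uvm_component_utils(axi_env)
  uvm_analysis_port #(axi_txn) mon_ap;
  axi_metric_analyzer          analyzer;

  function new(string name, uvm_component parent);
    super.new(name, parent);
  endfunction

  virtual function void build_phase(uvm_phase phase);
    super.build_phase(phase);
    mon_ap   = new("mon_ap", this);
    analyzer = axi_metric_analyzer::type_id::create("analyzer", this);
  endfunction

  virtual function void connect_phase(uvm_phase phase);
    super.connect_phase(phase);
    mon_ap.connect(analyzer.analysis_export);
  endfunction
endclass
```

Because the analyzer is a uvm_subscriber, the environment only needs the single connect() call. Everything else happens in write() and report_phase().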

See you in the next one.

The above code is available as a repo here: https://github.com/anupkumarreddy/generic_metric_analyzer

The EDA Playground link is here:

https://edaplayground.com/x/Cktb